Building a Face Detection Web API in Node.js

Introduction

As a follow-up to my previous article on how to use your webcam for face detection with OpenCV, I’d like to show you how you can create your own web API for that.

There are a few Node.js modules out there that do just that. A few of them even provide bindings for OpenCV so you can use it directly from JavaScript.

The catch is that most of these modules either rely directly on binaries or they need to be built for your machine from a makefile or a Visual Studio project, etc. That’s why some of them work on Windows for example, but not on Mac, or vice-versa.

The objective of this article is to show you the steps needed to create such a module for yourself so that you can customize it for your machine specifically. What we’re going to do is create a native Node.js add-on and a web server that will use that add-on to detect faces and show them to you.

Prerequisites

I’ve built this on a MacBook Pro running OS X El Capitan Version 10.11.1.

Since we’re going to use OpenCV, you’ll need to set it up on your machine first. I’ve described how to do this in my previous article, Build a Face Detector on OS X Using OpenCV and C++.

Next, we’ll need Node.js, which you can get from the official Node.js website. This will also install npm (the package manager for Node.js), which we need to install some extra Node.js modules.

The next thing we need is node-gyp, which you can install using npm. Before you do that, make sure you have all the required dependencies, which are listed in the node-gyp documentation. For Mac they are Python 2.7, Xcode, gcc and make. So if you followed the OpenCV installation guide, you should be good on everything except Python, which you should install. After that you can install node-gyp like this :

npm install -g node-gyp

Node-gyp is used to generate the appropriate files needed to build a native node.js add-on.

That’s pretty much it. Next up, we’ll generate a simple native add-on.

Setting up

First, we need to create a folder for the node project. I’m doing this in my home directory :

mkdir ~/node-face-detect && cd ~/node-face-detect

Now we need a folder to hold the native module, so we create it and navigate to it :

mkdir face-detect && cd face-detect

Node-gyp uses a file that specifies the target module name, source files, include directories, libraries and compiler flags to use when building the module. We need to create that file and call it binding.gyp. Its contents should look like this :

    {
        "targets": [
            {
                "target_name": "face-detect",
                "cflags": [ "-std=c++11", "-stdlib=libc++" ],
                "conditions": [
                    [ 'OS!="win"', {
                        "cflags+": [ "-std=c++11" ],
                        "cflags_c+": [ "-std=c++11" ],
                        "cflags_cc+": [ "-std=c++11" ],
                    }],
                    [ 'OS=="mac"', {
                        "xcode_settings": {
                            "OTHER_CPLUSPLUSFLAGS": [ "-std=c++11", "-stdlib=libc++" ],
                            "OTHER_LDFLAGS": [ "-stdlib=libc++" ],
                            "MACOSX_DEPLOYMENT_TARGET": "10.11"
                        },
                    }],
                ],
                "sources": [ "src/face-detect.cpp" ],
                "include_dirs": [
                    "include", "/usr/local/include"
                ],
                "libraries": [
                    "-lopencv_core",
                    "-lopencv_imgproc",
                    "-lopencv_objdetect",
                    "-lopencv_imgcodecs",
                    "-lopencv_highgui",
                    "-lopencv_hal",
                    "-lopencv_videoio",
                    "-L/usr/local/lib",
                    "-llibpng",
                    "-llibjpeg",
                    "-llibwebp",
                    "-llibtiff",
                    "-lzlib",
                    "-lIlmImf",
                    "-llibjasper",
                    "-L/usr/local/share/OpenCV/3rdparty/lib",
                    "-framework AVFoundation",
                    "-framework QuartzCore",
                    "-framework CoreMedia",
                    "-framework Cocoa",
                    "-framework QTKit"
                ]
            }
        ]
    }

Node-gyp still has some hiccups on Mac OS X and will use only cc or c++ by default when building (instead of gcc/g++ or whatever you have configured).

Now we use node-gyp to generate the project files :

node-gyp configure

The native module

As specified in the binding.gyp file, we now need to create the source file of the native module i.e. src/face-detect.cpp.

Here is the source code for that :

    //Include native addon headers
    #include <node.h>
    #include <node_buffer.h>
    #include <v8.h>

    //std::ostringstream and std::vector are used below
    #include <sstream>
    #include <vector>

    //Include OpenCV
    #include <opencv2/opencv.hpp>

    void faceDetect(const v8::FunctionCallbackInfo<v8::Value>& args) {
        v8::Isolate* isolate = args.GetIsolate();
        v8::HandleScope scope(isolate);

        //Get the image from the first argument
        v8::Local<v8::Object> bufferObj = args[0]->ToObject();
        unsigned char* bufferData = reinterpret_cast<unsigned char *>(node::Buffer::Data(bufferObj));
        size_t bufferLength = node::Buffer::Length(bufferObj);

        //The image decoding process into OpenCV's Mat format
        std::vector<unsigned char> imageData(bufferData, bufferData + bufferLength);
        cv::Mat image = cv::imdecode(imageData, CV_LOAD_IMAGE_COLOR);
        if(image.empty())
        {
            //Return null when the image can't be decoded.
            args.GetReturnValue().Set(v8::Null(isolate));
            return;
        }

        //OpenCV saves detection rules as something called a CascadeClassifier which
        // can be used to detect objects in images.
        cv::CascadeClassifier faceCascade;

        //We'll load the lbpcascade_frontalface.xml containing the rules to detect faces.
        //The file should be right next to the binary of the native addon.
        if(!faceCascade.load("lbpcascade_frontalface.xml"))
        {
            //Return null when no classifier is found.
            args.GetReturnValue().Set(v8::Null(isolate));
            return;
        }

        //This vector will hold the rectangle coordinates to a detection inside the image.
        std::vector<cv::Rect> faces;

        //This function detects the faces in the image and places the rectangles of the faces in the vector.
        //See the detectMultiScale() documentation for more details about the rest of the parameters.
        faceCascade.detectMultiScale(
            image,
            faces,
            1.09,
            3,
            0 | CV_HAAR_SCALE_IMAGE,
            cv::Size(30, 30));

        //Here we'll build the json containing the coordinates to the detected faces
        std::ostringstream facesJson;
        facesJson << "{ \"faces\" : [ ";
        for(auto it = faces.begin(); it != faces.end(); it++)
        {
            if(it != faces.begin())
                facesJson << ", ";
            facesJson << "{ ";
            facesJson << "\"x\" : " << it->x << ", ";
            facesJson << "\"y\" : " << it->y << ", ";
            facesJson << "\"width\" : " << it->width << ", ";
            facesJson << "\"height\" : " << it->height;
            facesJson << " }";
        }
        facesJson << "] }";

        //And return it to the node server as an utf-8 string
        args.GetReturnValue().Set(v8::String::NewFromUtf8(isolate, facesJson.str().c_str()));
    }

    void init(v8::Local<v8::Object> target) {
        NODE_SET_METHOD(target, "detect", faceDetect);
    }

    NODE_MODULE(binding, init);

Basically what this code does is register a method to our module. The method gets the first parameter as a buffer, decodes it to an OpenCV Mat image, detects the faces within the image using the classifier (which should be placed next to the binary), and returns a JSON string containing the coordinates of the faces found in the image.
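On the JavaScript side, the string returned by detect() still has to be parsed before it is useful. Here is a minimal sketch of that step, assuming the add-on returns either null or a JSON string in the shape built above. The name parseDetection is a hypothetical helper for illustration and is not part of the add-on :

```javascript
// Hypothetical helper: turns the add-on's return value into an array of faces.
// Assumes `result` is either null (image or classifier could not be loaded)
// or a JSON string shaped like { "faces": [ { x, y, width, height }, ... ] }.
function parseDetection(result) {
    if (result === null || result === undefined) {
        return null;
    }
    var parsed = JSON.parse(result);
    if (!parsed || !Array.isArray(parsed.faces)) {
        throw new Error('Unexpected detection result');
    }
    return parsed.faces;
}

// Example with a string in the same shape as the add-on's output
var faces = parseDetection('{ "faces" : [ { "x" : 39, "y" : 91, "width" : 240, "height" : 240 }] }');
console.log(faces.length + ' face(s) found');
```

Keeping the null case separate from the parsing error makes it easier to tell a failed detection apart from a malformed reply.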

Now that we have all the pieces in place for the native module, we can build it using :

node-gyp build

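If everything goes well, you should find a file called face-detect.node in the ./build/Release folder. This file is our native module, and we should now be able to require it in our JavaScript files. We also need to copy lbpcascade_frontalface.xml from the OpenCV source folder, under /data/lbpcascades/, next to this file. Note that the add-on loads the classifier through a relative path, which is resolved against the working directory of the node process; if detect() returns null for valid images, copy the file to the directory you start node from as well.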
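A missing classifier only shows up as a null result at request time, so it can help to look for the file when the server starts. The sketch below assumes the classifier is opened through the relative file name used in the add-on, which means it is looked up in the working directory of the node process. The name findClassifier is a hypothetical helper :

```javascript
var fs = require('fs');
var path = require('path');

var CLASSIFIER = 'lbpcascade_frontalface.xml';

// Hypothetical helper: returns the first directory in `dirs` that contains
// the classifier file, or null when none of them does.
function findClassifier(dirs) {
    for (var i = 0; i < dirs.length; i++) {
        if (fs.existsSync(path.join(dirs[i], CLASSIFIER))) {
            return dirs[i];
        }
    }
    return null;
}

// Warn early when the file is not in the directory node was started from.
var found = findClassifier([process.cwd()]);
if (found === null) {
    console.warn(CLASSIFIER + ' not found in ' + process.cwd());
}
```

The helper takes a list so that more candidate folders, such as the build/Release folder, can be checked in the same call.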

The Server

Now we have to create the server.js file for the node server in the project root (~/node-face-detect). It should load the native add-on for face detection, create a server that listens for PUT requests and call the native add-on on the contents of these requests. The code for that should look like this :

    //The path to our built native add-on
    var faceDetect = require('./face-detect/build/Release/face-detect');
    var http = require('http');

    //Our web server
    var server = http.createServer(function (request, response) {
        //Respond to PUT requests
        if (request.method == 'PUT')
        {
            //Collect body chunks as raw buffers
            var chunks = [];
            var length = 0;
            request.on('data', function (data) {
                chunks.push(data);
                length += data.length;
                //Destroy the connection if the file is too big to handle
                if (length > 1e6)
                    request.connection.destroy();
            });

            //All chunks have been sent
            request.on('end', function () {
                if (length === 0)
                {
                    //Nothing to decode
                    response.writeHead(400, {'Content-Type': 'application/json'});
                    response.end('{"error" : "empty request body"}');
                    return;
                }
                //Create a node buffer from the body to send to the native add-on
                var bodyBuffer = Buffer.concat(chunks, length);
                //Call the native add-on
                var detectedFaces = faceDetect.detect(bodyBuffer);
                if(null == detectedFaces)
                {
                    //Unsupported image format or classifier missing
                    response.writeHead(500, {'Content-Type': 'application/json'});
                    response.end('{"error" : "internal server error"}');
                }
                else
                {
                    //Faces detected
                    response.writeHead(200, {'Content-Type': 'application/json'});
                    response.end(detectedFaces);
                }
            });
        }
        else
        {
            //Unsupported methods
            response.writeHead(405, {'Content-Type': 'application/json'});
            response.end('{"error" : "method not allowed"}');
        }
    });

    //Start listening to requests
    server.listen(7000, "localhost");

To start the server just run :

node server.js

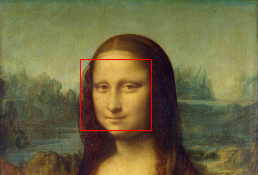
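Once the server is running, any HTTP client can call it. As a rough sketch, here is a small Node.js client built on the core http module. The host, port and path mirror the server.listen(7000, "localhost") call from the server, while putOptions and detectFaces are hypothetical helper names chosen for this example :

```javascript
var http = require('http');

// Builds the request options for the server from this article.
function putOptions(length) {
    return {
        host: 'localhost',
        port: 7000,
        path: '/',
        method: 'PUT',
        headers: {
            'Content-Type': 'application/octet-stream',
            'Content-Length': length
        }
    };
}

// Hypothetical helper: sends an image buffer to the server and passes the
// parsed JSON reply to callback(error, result).
function detectFaces(imageBuffer, callback) {
    var request = http.request(putOptions(imageBuffer.length), function (response) {
        var chunks = [];
        response.on('data', function (chunk) { chunks.push(chunk); });
        response.on('end', function () {
            var parsed;
            try {
                parsed = JSON.parse(Buffer.concat(chunks).toString('utf8'));
            } catch (error) {
                return callback(error);
            }
            callback(null, parsed);
        });
    });
    request.on('error', callback);
    request.end(imageBuffer);
}
```

To try it, read an image with fs.readFileSync('image.jpg') and pass the resulting buffer to detectFaces together with a callback that logs the result.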

Test it out

Save an image containing human faces as image.jpg. Then, using curl from the command line, send the image via a PUT request to the node server like this :

curl -i -X PUT http://localhost:7000/ -H "Content-Type: application/octet-stream" --data-binary "@image.jpg"

Depending on the image you send, you should see something like this :

    HTTP/1.1 200 OK
    Content-Type: application/json
    Date: Wed, 17 Feb 2016 07:19:44 GMT
    Connection: keep-alive
    Transfer-Encoding: chunked

    { "faces" : [ { "x" : 39, "y" : 91, "width" : 240, "height" : 240 }] }

Conclusion

Sometimes Node.js libraries might not meet your application needs or they might not fit your machine resulting in errors during npm install. When that happens, you can write your own custom native Node.js add-on to address those needs and hopefully, this article showed you that it’s possible.

As an exercise, you can try changing this application to return an image with rectangles surrounding the detected faces. If you’re having trouble returning a new buffer from inside the native add-on, try returning the image as a Data URI string.
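For the Data URI route, the encoding itself is short on the JavaScript side. The following is only a sketch, assuming the encoded image bytes are already available in a Buffer. The name toDataUri is a hypothetical helper, and the MIME type is something the caller has to know :

```javascript
// Hypothetical helper: wraps raw image bytes in a Data URI string.
// `mimeType` must match the actual image encoding, e.g. 'image/jpeg'.
function toDataUri(imageBuffer, mimeType) {
    return 'data:' + mimeType + ';base64,' + imageBuffer.toString('base64');
}

// Example with three arbitrary bytes instead of a real image
// (Buffer.from needs a reasonably recent Node.js version)
var sample = Buffer.from([1, 2, 3]);
console.log(toDataUri(sample, 'image/jpeg')); // prints data:image/jpeg;base64,AQID
```

A string like this can be placed directly in the src attribute of an img element, which makes the result easy to check in a browser.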

Build a Face Detector on OS X Using OpenCV and C++

Building and using C++ libraries can be a daunting task, even more so for big libraries like OpenCV. This article should get you started with a minimal build of OpenCV and a sample application written in C++.

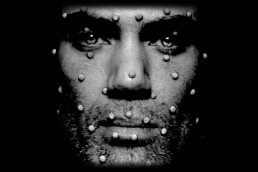

This application will get images from the webcam, draw rectangles around the faces in the images and show them to you on screen.

Requirements

I've built this on a MacBook Pro running OS X El Capitan Version 10.11.1.

We'll be using the GNU C++ compiler (g++) from the command line. Note that you should still have Xcode installed (I have Xcode 7.1 installed).

Here's what you need to do :

1. Get "OpenCV for Linux/Mac" from the OpenCV Downloads Page (I got version 3.0).

2. Extract the contents of the zip file from step 1 to a folder of your choosing (I chose ~/opencv-3.0.0).

3. Get a binary distribution of CMake from the CMake Downloads Page (I got cmake-3.4.0-Darwin-x86_64.dmg).

4. Install CMake.

Building OpenCV

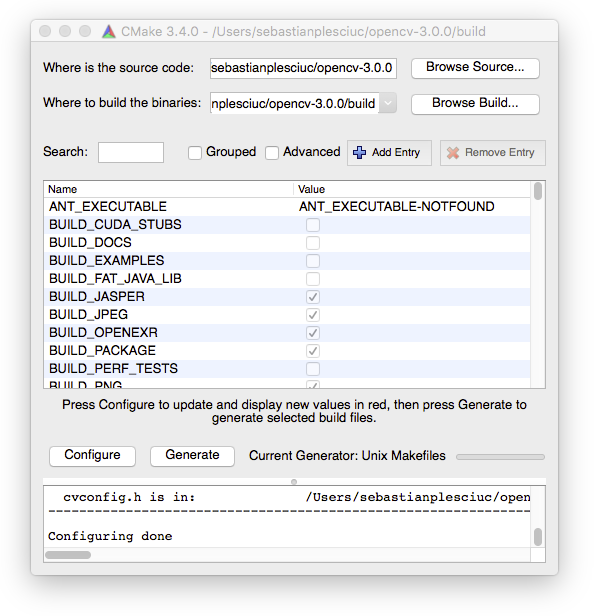

OpenCV uses CMake files to describe how the project needs to be built. CMake can transform these files into actual project settings (e.g. an Xcode project, Unix makefiles, a Visual Studio project, etc.) depending on the generator you choose.

First open CMake. A small window will pop up that lets you choose your build options based on the CMakeLists.txt files in the OpenCV source directory. Click the Browse Source... button and choose the path to the OpenCV source folder (the folder you extracted the zip file to in step 2). Then click the Browse Build... button and choose a path to a build folder. I'm going to create a new folder called build inside the source folder.

If at any point you are prompted to choose a generator, pick Unix Makefiles. If the paths you chose were correct, after you click the Configure button, you should be looking at something like this :

For a somewhat minimal OpenCV build, make sure you only have the following options enabled :

- BUILD_JASPER

- BUILD_JPEG

- BUILD_OPENEXR

- BUILD_PACKAGE

- BUILD_PNG

- BUILD_TIFF

- BUILD_WITH_DEBUG_INFO

- BUILD_ZLIB

- BUILD_opencv_apps

- BUILD_opencv_calib3d

- BUILD_opencv_core

- BUILD_opencv_features2d

- BUILD_opencv_flann

- BUILD_opencv_hal

- BUILD_opencv_highgui

- BUILD_opencv_imgcodecs

- BUILD_opencv_imgproc

- BUILD_opencv_ml

- BUILD_opencv_objdetect

- BUILD_opencv_photo

- BUILD_opencv_python2

- BUILD_opencv_shape

- BUILD_opencv_stitching

- BUILD_opencv_superres

- BUILD_opencv_ts

- BUILD_opencv_video

- BUILD_opencv_videoio

- BUILD_opencv_videostab

- ENABLE_SSE

- ENABLE_SSE2

- WITH_1394

- WITH_JASPER

- WITH_JPEG

- WITH_LIBV4L

- WITH_OPENEXR

- WITH_PNG

- WITH_TIFF

- WITH_V4L

- WITH_WEBP

You should disable the options that are not in the list, especially the BUILD_SHARED_LIBS one. Don't touch the options that are text fields unless you know what you're doing.

Most of these options you don't need for this particular exercise, but it will save you time by not having to rebuild OpenCV should you decide to try something else.

Once you have selected the settings above, click Generate. Now navigate to the build folder (I'll do so with cd ~/opencv-3.0.0/build/) and run make to build OpenCV.

Installing OpenCV

If everything goes well, after the build finishes, run make install to add the OpenCV includes to the /usr/local/include folder and the libraries to the /usr/local/lib and /usr/local/share/OpenCV/3rdparty/lib folders.

After that's done, you should be able to build your own C++ applications that link against OpenCV.

The Face Detector Application

Now let's try to build our first application with OpenCV.

Here's the code and comments that explain how to do just that :

    #include <iostream>
    #include <vector>

    //Include OpenCV
    #include <opencv2/opencv.hpp>

    int main(void)
    {
        //Capture stream from webcam.
        cv::VideoCapture capture(0);

        //Check if we can get the webcam stream.
        if(!capture.isOpened())
        {
            std::cout << "Could not open camera" << std::endl;
            return -1;
        }

        //OpenCV saves detection rules as something called a CascadeClassifier which
        // can be used to detect objects in images.
        cv::CascadeClassifier faceCascade;

        //We'll load the lbpcascade_frontalface.xml containing the rules to detect faces.
        //The file should be right next to the binary.
        if(!faceCascade.load("lbpcascade_frontalface.xml"))
        {
            std::cout << "Failed to load cascade classifier" << std::endl;
            return -1;
        }

        while (true)
        {
            //This variable will hold the image from the camera.
            cv::Mat cameraFrame;

            //Read an image from the camera and stop if no frame is available.
            if(!capture.read(cameraFrame) || cameraFrame.empty())
            {
                std::cout << "Could not read frame from camera" << std::endl;
                break;
            }

            //This vector will hold the rectangle coordinates to a detection inside the image.
            std::vector<cv::Rect> faces;

            //This function detects the faces in the image and
            // places the rectangles of the faces in the vector.
            //See the detectMultiScale() documentation for more details
            // about the rest of the parameters.
            faceCascade.detectMultiScale(
                cameraFrame,
                faces,
                1.09,
                3,
                0 | CV_HAAR_SCALE_IMAGE,
                cv::Size(30, 30));

            //Here we draw the rectangles onto the image with a red border of thickness 2.
            for( size_t i = 0; i < faces.size(); i++ )
                cv::rectangle(cameraFrame, faces[i], cv::Scalar(0, 0, 255), 2);

            //Here we show the drawn image in a named window called "output".
            cv::imshow("output", cameraFrame);

            //Waits 50 milliseconds for a key press, returns -1 if no key is pressed during that time
            if (cv::waitKey(50) >= 0)
                break;
        }

        return 0;
    }

I saved this as main.cpp. To build it I used the following command :

g++ -o main main.cpp -I/usr/local/include \
    -L/usr/local/lib -lopencv_core -lopencv_imgproc -lopencv_objdetect \
    -lopencv_imgcodecs -lopencv_highgui -lopencv_hal -lopencv_videoio \
    -L/usr/local/share/OpenCV/3rdparty/lib -llibpng -llibjpeg -llibwebp \
    -llibtiff -lzlib -lIlmImf -llibjasper -framework AVFoundation -framework QuartzCore \
    -framework CoreMedia -framework Cocoa -framework QTKit

Hopefully, no errors should occur.

Conclusions

Before running the application, you have to copy lbpcascade_frontalface.xml next to the main file. You can find this file in the OpenCV source folder under /data/lbpcascades/. You can also find some other cascades there to detect eyes, cat faces, etc.

Now just run ./main and enjoy!